Zhen Fan

Research Vision

My research aims to build simulation-driven, knowledge-enhanced embodied AI systems that can operate reliably in complex industrial environments.

Modern robots excel at perception or control in isolation, but they still struggle with scarce training data, limited semantic understanding, and opaque decision-making, all of which must be addressed before real-world deployment.

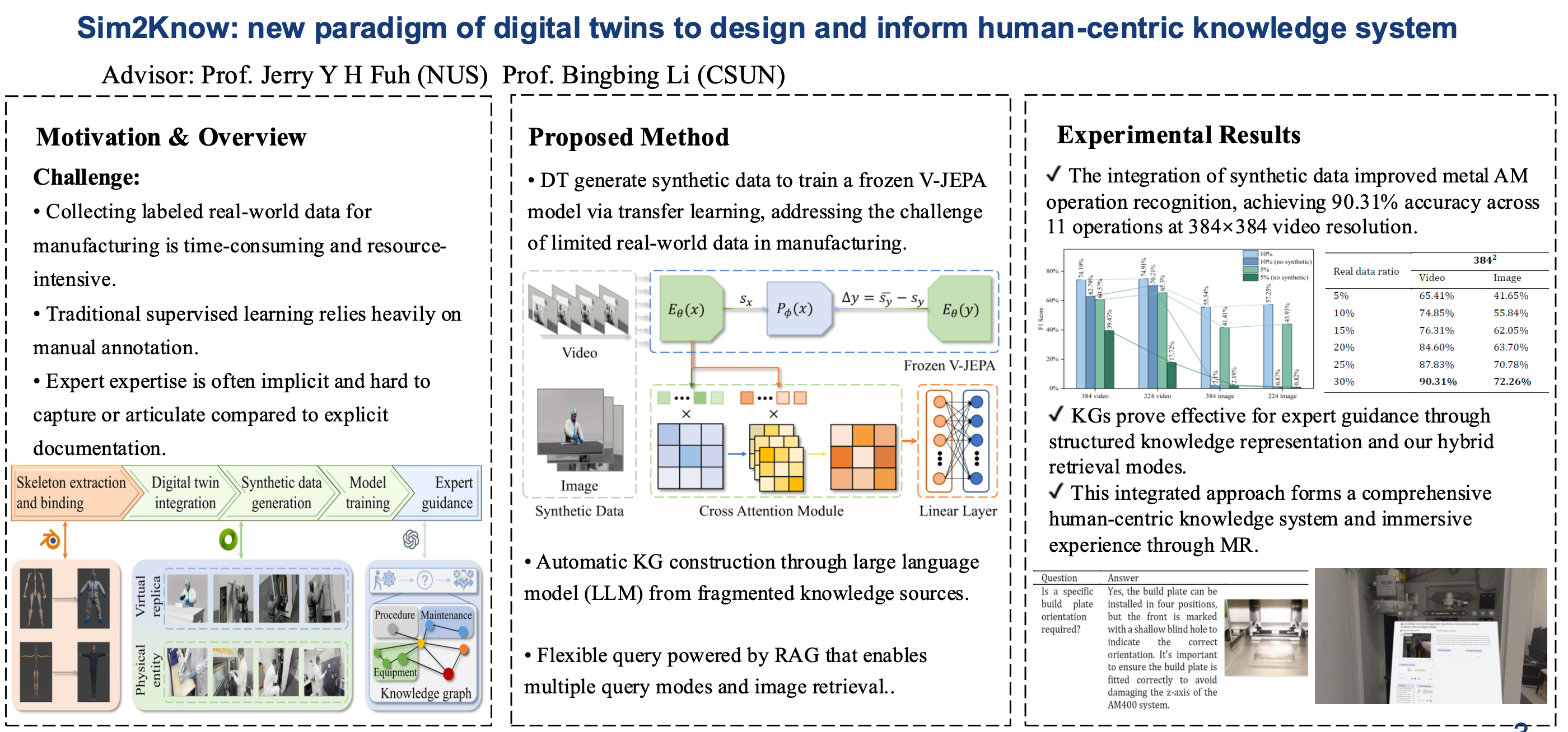

1. Simulation as a Source of Scalable Experience

High-fidelity digital twins and world models (e.g., NVIDIA Omniverse and NVIDIA Cosmos) provide controllable environments for generating diverse multimodal data.

My work focuses on using these simulations to train perception and action models that transfer effectively to the real world (e.g., achieving 90.31% accuracy in factory validation).

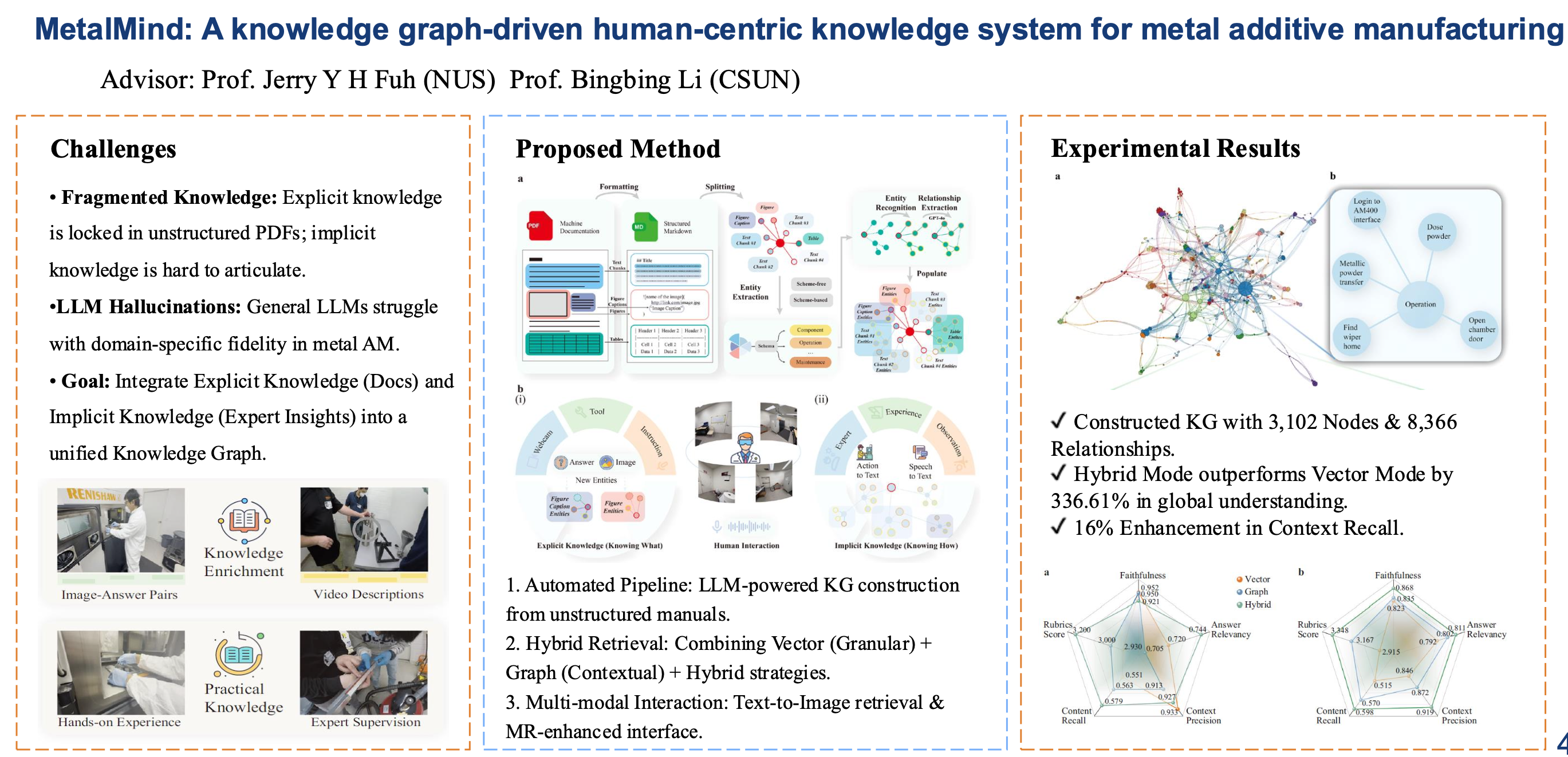

2. Structured Knowledge for Interpretation and Reasoning

Industrial domains contain rich but unstructured knowledge spread across manuals, procedures, and expert insights.

I extract and organize this knowledge using LLM-based named entity recognition (NER), knowledge graphs, and retrieval-augmented generation (RAG) pipelines. This enables embodied agents to perform context-aware and explainable reasoning rather than relying purely on statistical learning.

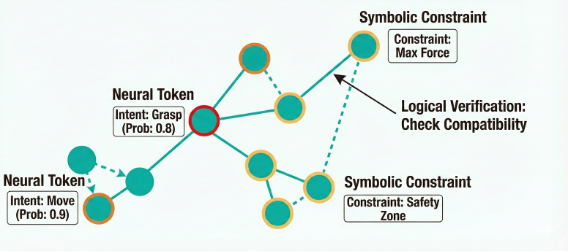

3. Toward Explainable and Human-Aligned Embodied Intelligence

By combining simulation-based learning with structured knowledge, I aim to develop embodied systems that can:

- Understand human intent within industrial contexts.

- Justify their decisions through transparent reasoning paths.

- Follow strict safety and operational constraints.

- Collaborate effectively with human operators.

Together, these capabilities form the foundation for Human–Robot Collaboration (HRC) that is interpretable, safe, and adaptable, moving beyond pattern recognition toward knowledge-grounded intelligence that works in the real world.